LouKI7: Part One

The Idea

Chess engines have exceeded the expertise of grand masters some time ago. In 2016, DeepMind’s AlphaGo did the same with the significantly more complex Chinese board game Go. Other AI’s have conquered the video games sector. AlphaStar can compete against the best player in Blizzard’s strategy game StarCraft 2. OpenAI Five achieved top-level performance at Dota 2. In which a team of 5 players combine their abilities to win against the opposing team.

Creating our own Artifical Intelligence

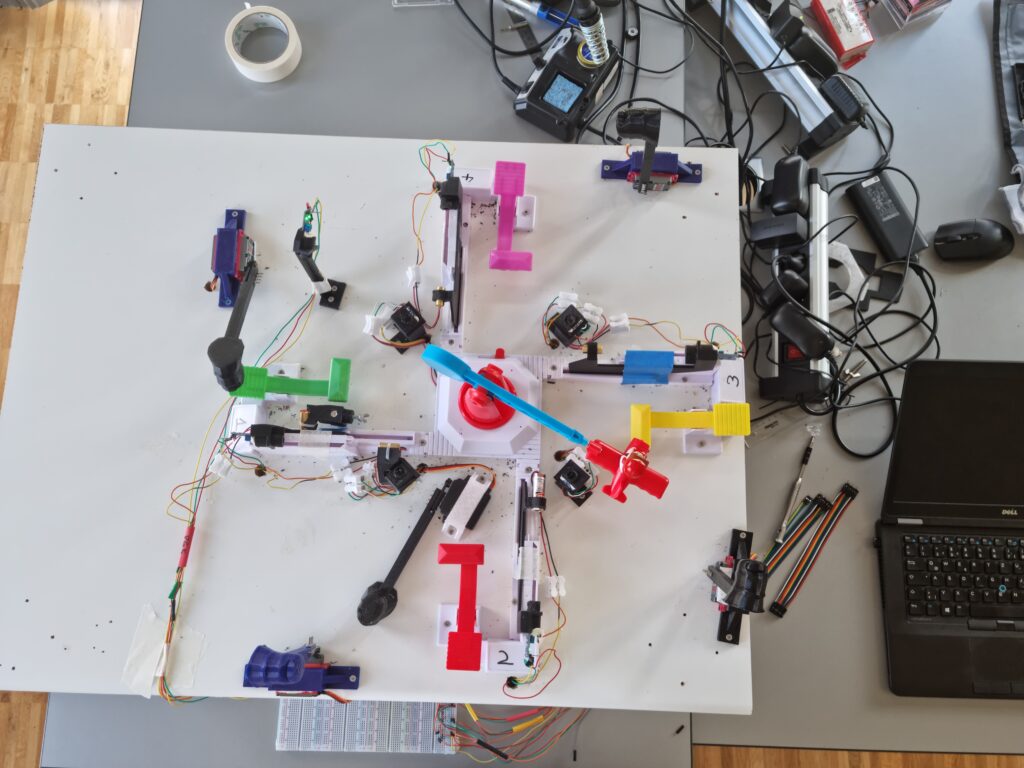

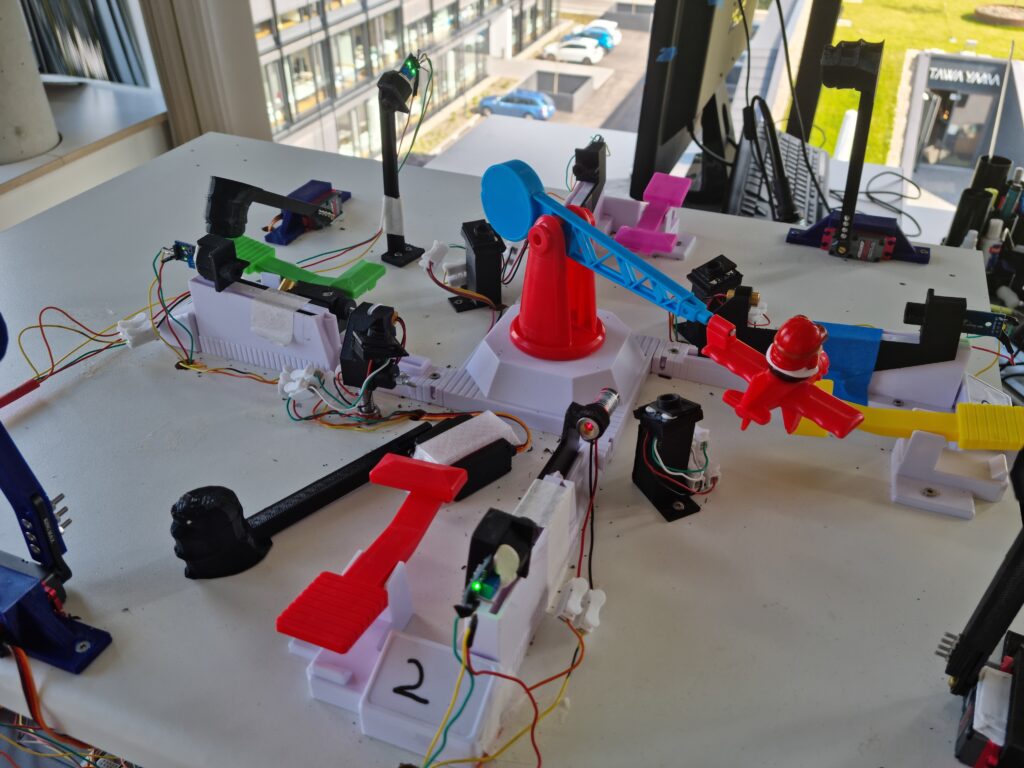

We at virtual7 thought of pushing the envelope and developing our own AI for the next frontier of non-human prowess. A bot that would master the game of Looping Louie. Okay, maybe that sounded a bit too pompous. But creating an AI for this interactive game, beloved among children and students alike, still sounds like a fun challenge. And keep in mind, that this is a non-virtual game. Unlike in Starcraft or Dota, a real-world entity will have to play this game. For Chess and Go, a human can act as the physical extension and execute the moves proposed by the AI. In Looping Louie, timing and the right exertion of force are key. To play the moves by our artificial overlord, some kind of robot, with the ability to follow the instructions precisely, will have to do the trick.

This series aims at documenting the development of this real-world AI. Part One will cover basic concepts of how an AI learns and illustrates the steps towards the first attempt. The next part will be a comprehensive deep dive into the theory of the learning algorithm. Part Three talks about improving learning results with the help of a simulation. The evolution of the setup is covered in part Four. Lastly, part Five will showcase the frontend, which has been specifically designed for this project. This will give the robot a more human feel and show live game statistics.

Artificial Intelligence

What are we actually talking about here?

Artificial Intelligence (AI) is one of these buzzwords being thrown around a lot lately, but what does it mean? Though there are quite a several different definitions floating around we will stick to the Oxford dictionary’s answers:.

“Artificial intelligence is the study and development of computer systems that copy intelligent human behavior.”

That sounds fair enough and is easily applied to our problem at hand. What we want, is a robot, that can play Looping Louie as a human would. Preferably, it should exceed human performance.

Looking deeper at some of the examples of AI already given (Bots for Chess, Go, and various video games). One quickly realizes that those AI’s were trained using a technique called Reinforcement Learning (RL). This is a subgroup of so-called machine learning algorithms. What exactly makes them a member of the RL family? In order to answer this, we will have to introduce a minimum of nomenclature.

The Artificial Chess Player

Let’s say, we were trying to build an AI playing chess. The artificial chess player that we want to train is called the Agent. The agent determines what move to play next. But how does he know the best next move or even know the set of possible moves? The latter is addressed with the concept of an Environment. A chess Environment would represent the current state of the game. This includes the information about the position of each piece on the board.

It will further hand the agent the set of all possible moves. The agent can then choose one of these moves, in this particular game state. To understand which move was good or bad, the agent receives feedback for each of his actions – the so-called Reward. Choosing the right reward can be a tricky problem. For the sake of simplifying things, we assume this problem has already been addressed and the reward here is a measure of how much the move contributed to winning the game in the end.

The Learning Process

The learning process now looks like this (compare !RLLoop): The agent is given the state of the environment. Based on this state, the agent chooses an action (one possible move of a piece). This action changes the state of the game by itself. Further, it will elicit the opponent to respond with his move. The action and its impact on the new game state are rewarded according to their merit. Then the cycle starts again. When the agent is given the new state and chooses the next action, which again is rewarded, and so on. This procedure is repeated until the game ends and the agent either won or lost. By rewarding the agent according to the quality of its action, it can gradually become better.

With the terminology introduced and the basic learning principle in mind, the implementation for our Looping Louie AI can start.

Get to know more about LouKI7! Learn how the game is played and what we did to realize the setup in the second part.

- The LouKI7 Environment - 17. Juni 2022

- Artifical Intelligence with Big Role Models - 10. Juni 2022