Naming

Let’s start by giving our Agent a name and call it LouKI7 (a rather ingenious wordplay, alluding to game, company, and AI (KI = AI in German) all at once!). The world inhabited by LouKI7 is its Environment and the Environment is the game, Looping Louie.

<Interlude> The Game

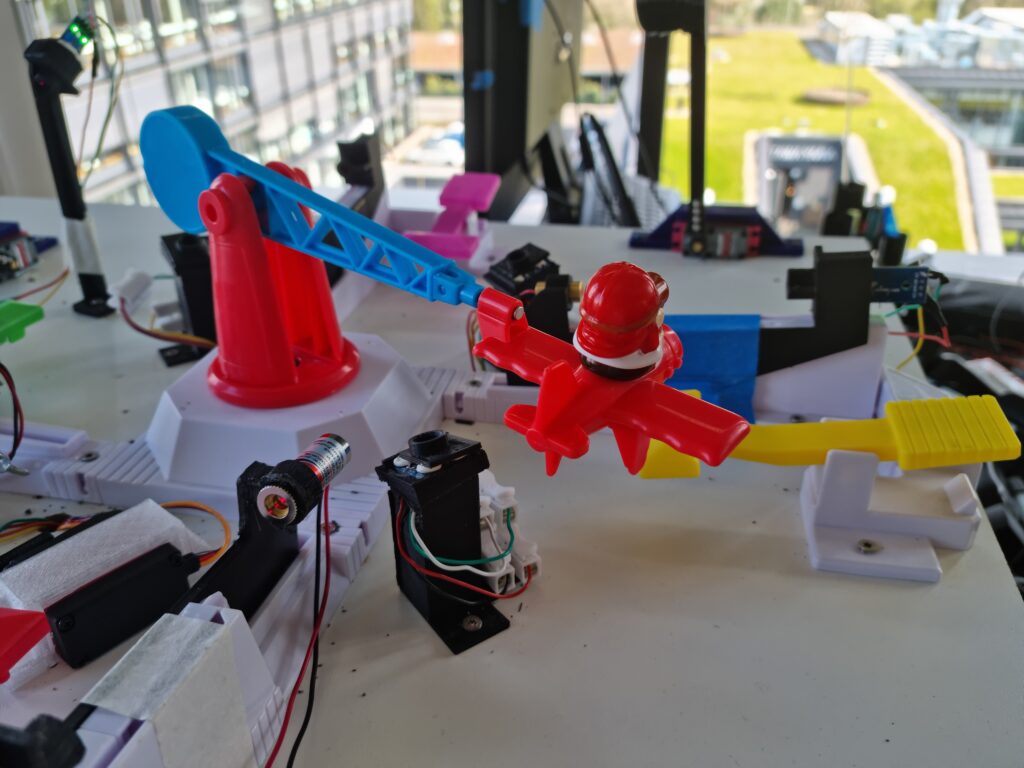

A quick reminder of the rules: Looping Louie is a 4-player game. Mr. Louie (the flying little man in the plane) is cruising in a circular motion, and unless pushed upwards through player intervention (by hitting on one end of a seesaw, while the other end hits Mr. Louie) will hit the player’s coins located immediately behind the player’s seesaw. Each player has three coins, and if all coins are hit by Mr. Louie the respective player drops out of the game. Propelling Mr. Louie into the air is of course not only done in self-defense but also as a means of attacking other players.

<\Interlude>

So how can we adequately represent the state of the environment? Firstly we will have to have a way of determining how many coins each player has. That way, we can give LouKI7 feedback, when he loses coins (bad) or when he managed to successfully attack another player (good). Secondly, human players see Mr. Louie’s approach and can react accordingly. In order to give LouKI7 a chance to make a similarly informed choice on what action to take, the trajectory of Mr. Louie has to be measured in some way or another.

Training Approach

Using actual coins during the training process is not a viable approach. We want to be able to play a lot of games in a row without having to place the coins back to where they have been before they were kicked down by Mr. Louie before the start of each game. One possible solution is to discard the coins altogether and instead detect flybys that would have hit the coin of the respective player. We implemented this solution by building laser gates that will trigger an event when the laser’s path is intercepted by Mr. Louie (!Lasergate). Measuring the trajectory of Mr. Louie is a more demanding task. Placing distance sensors on top of the moving platform, though possible, is difficult to pull off. The sensor has to be connected somehow, but how to connect the cables on the ever-turning platform?

Measurements

Saving this kind of approach for another day, we decided to place four distance measurement sensors on the ground, looking upwards and detecting flyovers (compare !SchemaSetup). The measurement rate of these sensors is high enough to yield about five measurement points per flyover. Thus we have about 20 measurement points from four flyovers. Obviously, this kind of trajectory resolution is far from perfect, but placing the sensors at valuable positions will still give a useful description of the overall trajectory. Additionally, since we get multiple distance measurements per flyby, this also allows for some inference of the general direction (up or down) and lateral velocity of Mr. Louie.

That’s it for the Environment of our agent. With the help of the laser gates, LouKI7 will know the score of the game, and with the help of the distance measurements, it will have an idea of where Mr. Louie is about to be next.

Setup

We already know that the physical setup has to include four distance sensors and four laser gates. But how does our LouKI7 execute the actions? We need an artificial hand, that presses the one end of the seesaw at the appropriate time. For that purpose, a 3D-printed hammer is connected to a servomotor and we call it LouKI7-robot (!Hammer). Add three more hammers (so that the AI can have three sparring partners) and an additional laser gate close to the LouKI7-robot, that gathers information about whether Mr. Louie was hit or not (!PictureSetup).

All sensors and servo motors are connected to a Raspberry Pi, that acts as the glue between software and hardware. All sensory data is collected and processed by the Pi. In this way, a digital representation of the Environment is built. This also includes the implementation of the game rules and the integration of the algorithm, that progressively trains LouKI7.

Enough for this part. We mostly covered some Reinforcement Learning basics and the construction of the Environment. Besides the Environment, the other important puzzle piece is the learning algorithm. The interplay of these two will be explained in more detail in part three, where we take a look at simulating the whole game virtually. But before that, it is useful to attain some understanding of the learning algorithm itself, which will be the topic of the next part of this series.

- The LouKI7 Environment - 17. Juni 2022

- Artifical Intelligence with Big Role Models - 10. Juni 2022